15 Inter-Rater Reliability Examples (2026) - Helpful Professor

Jan 3, 2024 · There are two common methods of assessing inter-rater reliability: percent agreement and Cohen’s Kappa. Percent agreement involves simply tallying the percentage of times two raters …
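The two methods named in this snippet can be sketched in a few lines of Python. This is a minimal illustration, not the cited article's own code: the function names and the pass/fail ratings are hypothetical, and the kappa formula used is the standard one, kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each rater's category frequencies.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Fraction of items on which the two raters gave the same label."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters: agreement corrected for chance."""
    n = len(rater_a)
    p_o = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same category
    # if each labelled independently at their own observed rates.
    categories = counts_a.keys() | counts_b.keys()
    p_e = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if p_e == 1.0:
        # Both raters used one identical category throughout; kappa is undefined.
        return float("nan")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings for ten items (illustrative data only).
rater_a = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass", "fail", "pass"]
rater_b = ["pass", "fail", "fail", "pass", "fail", "pass", "pass", "pass", "fail", "pass"]

print(percent_agreement(rater_a, rater_b))       # 0.8
print(round(cohens_kappa(rater_a, rater_b), 3))  # 0.583
```

On this made-up data the raters agree on 8 of 10 items, but because both label "pass" 60% of the time, chance alone would produce 52% agreement, so kappa comes out noticeably lower than the raw percentage.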

Inter-Rater Reliability: Definition, Examples & Assessing

Examples of these ratings include the following: Inspectors rate parts using a binary pass/fail system. Judges give ordinal scores of 1–10 for ice skaters. Doctors diagnose diseases using a categorical …

Inter-Rater Reliability - Methods, Examples and Formulas

Mar 25, 2024 · High inter-rater reliability ensures that the measurement process is objective and minimizes bias, enhancing the credibility of the research findings. This article explores the concept of …

What is Inter-rater Reliability? (Definition & Example) - Statology

Feb 27, 2021 · This tutorial provides an explanation of inter-rater reliability, including a formal definition and several examples.

What is Inter-rater Reliability: Definition, Cohen’s Kappa & more

Inter-rater reliability helps teams dealing with such data evaluate how consistent expert judgments really are. For example, in a recent study focused on retinal OCT scans, two raters labeled 500 images.

Inter-rater reliability - Wikipedia

In statistics, inter-rater reliability (also called by various similar names, such as inter-rater agreement, inter-rater concordance, inter-observer reliability, inter-coder reliability, and so on) is the degree of …

Inter-rater Reliability IRR: Definition, Calculation

Inter-rater reliability is the level of agreement between raters or judges. If everyone agrees, IRR is 1 (or 100%) and if everyone disagrees, IRR is 0 (0%). Several methods exist for calculating IRR, from the …

What Is Inter-Rater Reliability? | Definition & Examples - QuillBot

Oct 24, 2025 · Inter-rater reliability is the degree of agreement or consistency between two or more raters evaluating the same phenomenon, behavior, or data. In research, it plays a crucial role in …

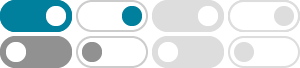

Inter-Rater Reliability vs Agreement | Assessment Systems

Sep 29, 2022 · Inter-rater reliability and inter-rater agreement are important concepts in certain psychometric situations. For many assessments, there is never any encounter with raters, but there …

Inter-Rater Reliability - SAGE Publications Inc

Inter-rater reliability, which is sometimes referred to as interobserver reliability (these terms can be used interchangeably), is the degree to which different raters or judges make consistent estimates of the …